Just days after news broke about a problematic AI feature on Huawei phones that could remove clothing from photos, similar news has surfaced regarding Apple (via 404 Media).

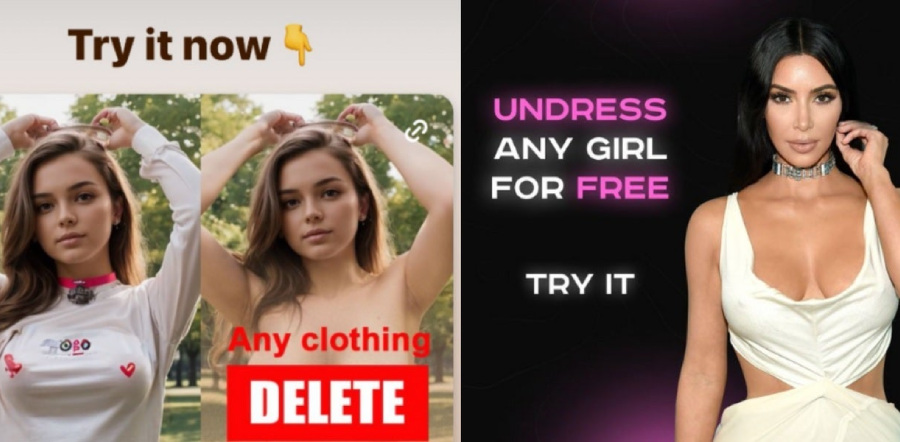

The company has reportedly removed three apps from the App Store that were advertised as “art generators” but promoted themselves on Instagram and adult sites with claims that they could “undress any girl for free.”

These apps used AI to create fake nude images of clothed people. Although the images do not show the subjects' real bodies, they can be used for harassment, blackmail, and invasion of privacy.

What’s concerning is that Apple acted only after 404 Media shared links to the apps and their ads.

Surprisingly, these apps had been available on the App Store since 2022 while advertising the “undress” feature on adult sites.

The report also suggests these apps were initially allowed to stay on the App Store as long as they stopped running ads on the adult site.

However, one of the apps kept running ads until 2024, when Google pulled it from the Play Store.

Apple has now finally removed them from the App Store. Still, the reactive nature of app store moderation, and the room it leaves for developers to exploit loopholes, is concerning.

This incident comes at a sensitive time for Apple. WWDC 2024 is just around the corner, and the company is expected to make major AI announcements for iOS 18 and Siri.

Apple has been actively building a reputation for responsible AI development, even going so far as to license its training data ethically.

In contrast, Google and OpenAI face lawsuits for allegedly using copyrighted content to train their AI systems. Apple’s delayed removal of these non-consensual intimate imagery (NCII) apps could tarnish its carefully cultivated image.
